I migrated all my tweets, here's how

On how I reclaimed my tweets and imported them into my personal website

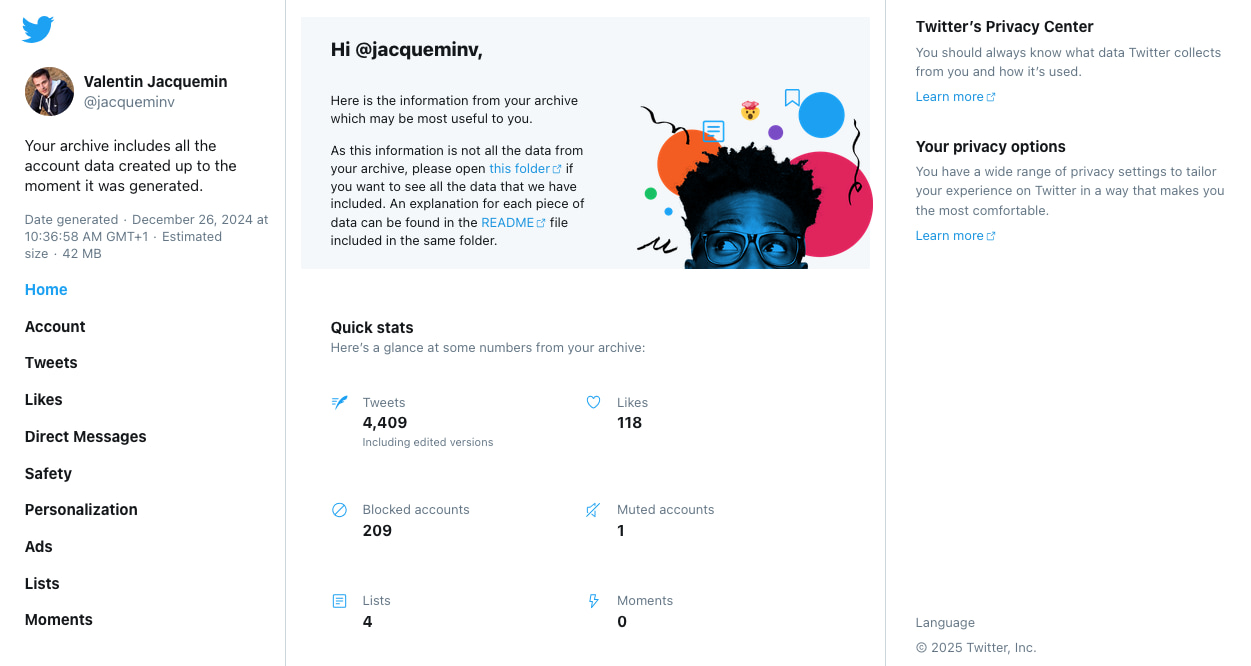

About a year ago, I closed my Twitter account. Before pulling the trigger, though, I exported my personal content, as I knew I did not want to lose all that history of my online presence.

Funnily enough, that export feature still carries the Twitter branding; the folks at X did not bother to update it.

What arrived in my mailbox was a fully functioning static website, where I could browse my own feed as if Twitter still existed. You can search, filter and sort the feed as you please, all locally. The web as I like it.

A year ago, I did not know what I would do with that archive; I just wanted to close my account without losing my content. When I decided to revive my personal website, I knew my tweets would find a new home there.

Here’s how I migrated that content.

When you browse the archive, you quickly find your way around. And since it is a static website anyway, you can figure out how the whole thing works by looking at its sources.

```text
twitter-archive> tree -P '*.html' -L2
.
├── assets
│   ├── fonts
│   ├── images
│   └── js
├── data
│   ├── community_tweet_media
│   ├── deleted_tweets_media
│   ├── direct_messages_group_media
│   ├── direct_messages_media
│   ├── moments_media
│   ├── moments_tweets_media
│   ├── profile_media
│   ├── tweets_media
│   └── tweets.js
└── Your archive.html
```

The entire collection of tweets is in data/tweets.js. From there to my own website, it’s a matter of using a few tools to filter and reshape that array of microblog entries.

First, I kept only the JSON-compatible part of data/tweets.js, i.e. I dropped the JavaScript variable declaration, and saved the result as preprocess.1.json.
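That first step can be scripted. Below is a minimal sketch, assuming the file opens with a single assignment such as `window.YTD.tweets.part0 = [` on its first line (check your own export, the variable name may differ); `strip_js_assignment` is a hypothetical helper name:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print a tweets.js file without its leading
# JavaScript assignment, leaving only the JSON array.
# Assumes the assignment sits on line 1, e.g. "window.YTD.tweets.part0 = [".
strip_js_assignment() {
  # On line 1 only, delete everything up to the first "=" plus any
  # whitespace after it; every other line is printed unchanged.
  sed '1s/^[^=]*=[[:space:]]*//' "$1"
}

# Usage: strip_js_assignment data/tweets.js > preprocess.1.json
```

Restricting the substitution to line 1 keeps any `=` inside tweet texts untouched.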

Second, I filtered out all replies, as I only wanted to keep my own posts. Bringing replies back into their context would be too much work, and I am not even sure it would be feasible at all.

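```bash
jq '[.[]
  | .tweet.id as $id
  | (.tweet.entities.media | length > 0) as $hasMedia
  # Drop replies: keep only tweets without an in_reply_to_user_id key
  | select(IN(paths; ["tweet", "in_reply_to_user_id"]) == false)
  # Remove the fields the website does not need
  | del(
      .tweet.extended_entities,
      .tweet.favorite_count,
      .tweet.id_str,
      .tweet.truncated,
      .tweet.retweet_count,
      .tweet.id,
      .tweet.possibly_sensitive,
      .tweet.favorited,
      .tweet.entities.user_mentions,
      .tweet.entities.symbols,
      .tweet.entities.hashtags,
      .tweet.retweeted,
      .tweet.edit_info,
      .tweet.source,
      .tweet.display_text_range)
  # Replace the media entities with the tweet id, used later to find the files;
  # the explicit "else ." keeps this working on jq versions older than 1.7
  | .tweet.entities.media |= if $hasMedia then $id else . end
  ]' preprocess.1.json > preprocess.2.json
```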

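That filtered out about a third of my tweets. The same pass also drops the fields I had no use for and replaces each tweet’s media entities with the tweet id: the archive prefixes media file names with the id of their tweet, so the id is all that is needed to find them later.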
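The `IN(paths; ...)` test only checks whether a tweet has an `in_reply_to_user_id` key at all, so `has` should express the same thing more directly. The sketch below does that and adds a twist the original does not have: it also keeps replies to your own account, i.e. threads. `keep_posts_and_threads` and its account-id argument are hypothetical, and the id is compared as a string on the assumption that the export stores ids as strings (as it does for the `indices` in the sample that follows):

```bash
#!/usr/bin/env bash
# Hypothetical variant of the reply filter: keep tweets that are not replies,
# plus replies to your own account (threads).
# $1: JSON file shaped like the archive, i.e. [ { "tweet": { ... } }, ... ]
# $2: your own account id as a string; pass "" to drop every reply.
keep_posts_and_threads() {
  jq --arg me "$2" '[.[]
    | select(
        # Not a reply: the tweet has no in_reply_to_user_id key at all
        (.tweet | has("in_reply_to_user_id") | not)
        # Or a reply to yourself, i.e. the next tweet of a thread
        or .tweet.in_reply_to_user_id == $me
      )]' "$1"
}
```

Passing an empty id makes it behave like the plain reply filter above.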

Then I reshaped the tweets. For example, the JSON payload provides the expanded URL of every shortened link in a tweet:

```json
{
  "tweet" : {
    "entities" : {
      "urls" : [
        {
          "url" : "https://t.co/FhmBWq39rL",
          "expanded_url" : "https://activitypub.rocks/",
          "indices" : [
            "178",
            "201"
          ]
        }
      ]
    },
    "created_at" : "Fri Nov 01 11:54:07 +0000 2024",
    "full_text" : "\"Don't you miss the days when the web really was the world's greatest decentralized network? Before everything got locked down into a handful of walled gardens? So do we.\" – https://t.co/FhmBWq39rL",
    "lang" : "en"
  }
}
```

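I definitely don’t want to depend on the Twitter URL shortener anymore. Thanks to that thorough payload, translating the links was easy.

The following jq script replaces the first shortened URL of each tweet with a link to its expanded version and carries the remaining URLs over, so running it several times, feeding each output back in as the next input, converts them all.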

```bash
jq '[.[]
  | .tweet.entities.urls[0]?.url as $url
  | .tweet.entities.urls[0]?.expanded_url as $expanded_url
  # Replace the first t.co link (if any) with an anchor to the expanded URL
  | (if $url then .tweet.full_text
       | sub($url; "<a href=\"" + $expanded_url + "\">" + $expanded_url + "</a>")
     else .tweet.full_text end) as $text
  # Keep only the fields needed later; the remaining URLs wait for the next pass
  | { tweet: {
      full_text: $text,
      lang: .tweet.lang,
      created_at: .tweet.created_at,
      entities: {
        urls: .tweet.entities.urls[1:],
        media: .tweet.entities.media
      }
    }}]' preprocess.2.json > preprocess.3.json
```
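Running the pass several times works because each run consumes exactly one URL per tweet. A single-pass alternative can fold over all URLs with jq’s `reduce`. This is a sketch, assuming every entry in `.tweet.entities.urls` has `url` and `expanded_url` fields as in the sample above; `expand_all_urls` is a hypothetical name, and unlike the script above it only rewrites `full_text` and drops the `urls` entity instead of reshaping the whole tweet:

```bash
#!/usr/bin/env bash
# Hypothetical single-pass URL expansion: fold over every entry in
# .tweet.entities.urls and turn each t.co link in full_text into an anchor.
expand_all_urls() {
  jq '[.[]
    | .tweet.full_text = (
        reduce (.tweet.entities.urls // [])[] as $u
          (.tweet.full_text;
           # sub() reads $u.url as a regular expression; the only special
           # character a t.co URL contains is ".", which also matches itself
           sub($u.url; "<a href=\"\($u.expanded_url)\">\($u.expanded_url)</a>"))
      )
    # Every link is now inline, so the urls entity is no longer needed
    | del(.tweet.entities.urls)]' "$1"
}

# Usage: expand_all_urls preprocess.2.json > expanded.json
```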

Once the content was reshaped, I created one file per tweet under the notes section of my Hugo website, using a bash script:

```bash
#!/usr/bin/env bash
# Generate one Hugo page per tweet from the reshaped JSON array.

file='preprocess.5.json'
length=$(jq 'length' "$file")
i_tweet=1

for (( i=0; i<length; i++ ))
do
  created_at=$(jq -r ".[$i] | .date | todate" "$file")
  output_folder="output/tweets/$(( i_tweet++ ))"
  out="$output_folder/index.md"
  hasMedia=$(jq ".[$i] | if .tweet.media then true else false end" "$file")
  lang=$(jq -r ".[$i] | .tweet.lang" "$file")
  content=$(jq -r ".[$i] | .tweet.full_text" "$file")

  # Reset per-tweet values so nothing leaks from the previous iteration
  template='tweet.pre'
  mediaHtml=''

  if [ "$hasMedia" = "true" ]; then
    template='tweet-with-media.pre'
    # The media value is used as a file name prefix in tweets_media
    filename=$(jq -r ".[$i] | .tweet.media" "$file")
    media=$(basename "$(ls tweets_media/"$filename"*)")
    if [[ "$media" == *.mp4 ]]; then
      mediaHtml="<video autoplay loop src='/notes/$media' class='u-video'></video>"
    else
      mediaHtml="<img src='/notes/$media' class='u-photo' />"
    fi
  fi

  # Fill the template placeholders with m4
  data=$(m4 -D__CREATED_AT__="$created_at" \
            -D__LANG__="$lang" \
            -D__CONTENT__="$content" \
            -D__MEDIA__="$mediaHtml" \
            "$template")

  mkdir -p "$output_folder"
  printf '%s\n' "$data" > "$out"
done
```
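The media lookup in the loop parses `ls` output, which works as long as exactly one file matches, but it gets confused by several matches and yields no usable name when a tweet’s file is missing from the export. A minimal sketch of a stricter lookup using a plain glob instead; `find_tweet_media` is a hypothetical name, and it assumes, like the `ls` pattern, that media file names start with the value stored in the tweet:

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the name (without directory) of the first file
# in directory $1 whose name starts with prefix $2, e.g. a tweet id.
# Returns 1 when nothing matches instead of printing an empty name.
find_tweet_media() {
  local dir=$1 prefix=$2 f
  for f in "$dir/$prefix"*; do
    # An unmatched glob stays literal, so check that the file really exists
    if [ -e "$f" ]; then
      printf '%s\n' "${f##*/}"
      return 0
    fi
  done
  return 1
}
```

In the loop, `media=$(find_tweet_media tweets_media "$filename")` could replace the `ls` line, and its non-zero status could be used to report tweets whose media did not make it into the export.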

The generation script also relies on m4, simply to keep the templates in separate files, which makes them a bit easier to manage.

Here’s, for example, how I prepared tweet.pre. The script uses either this template or tweet-with-media.pre, depending on whether the tweet has media.

```
changequote(<!, !>)
---
date: __CREATED_AT__
lang: __LANG__
params:
  kind: tweet
---
<p>__CONTENT__</p>
<p class="meta original-link">
  First published on <a href="https://twitter.com">twitter</a>
  (<a href="/articles/1/">?</a>)
</p>
```

That’s it! 3143 tweets kept intact, now persisted on my own website.